Kubernetes Secrets are not actually secret ;)

Sometimes, applications running in a Kubernetes cluster need access to sensitive information. To make it available to a containerized application, #Kubernetes has a dedicated API resource object called Secret. A Secret can be consumed through a volume mount or an environment variable.
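From the application's side, both delivery options look very ordinary. Here is a minimal Python sketch, assuming a hypothetical environment variable name and a hypothetical mount path (neither comes from a real manifest), that tries the environment variable first and falls back to the mounted file:

```python
import os
from pathlib import Path


def read_secret(env_name: str, file_path: str) -> str:
    """Return the secret from the environment variable if it is set,
    otherwise read it from the file projected by the volume mount."""
    value = os.environ.get(env_name)
    if value is not None:
        return value
    # Each key of a mounted Secret shows up as one file in the mount directory.
    return Path(file_path).read_text().strip()


# Hypothetical usage inside a container:
# password = read_secret("DB_PASSWORD", "/etc/secrets/db-password")
```

Either way, the application receives the plain value, so the protection has to come from how the secret is stored and who can read it.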

By default, these Secret objects are stored in etcd, the distributed state database of Kubernetes. Since the values are only Base64-encoded and not encrypted, anyone with access to etcd can read the secrets stored there. Base64 is an encoding, not encryption, so anyone can decode it just like that.
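To see how little Base64 protects, here is a small Python sketch. The password is a made-up example value; the point is that decoding needs no key at all:

```python
import base64

# Made-up example value, encoded the way it would appear in a Secret's data field.
encoded = base64.b64encode(b"s3cr3t-pa55word").decode("ascii")


def decode_secret_value(value: str) -> str:
    """Decode a Base64 value. No key or password is required."""
    return base64.b64decode(value).decode("utf-8")


print(encoded)                       # the "protected" form
print(decode_secret_value(encoded))  # the original value, recovered instantly
```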

So, how do we restrict access to our secrets in Kubernetes?

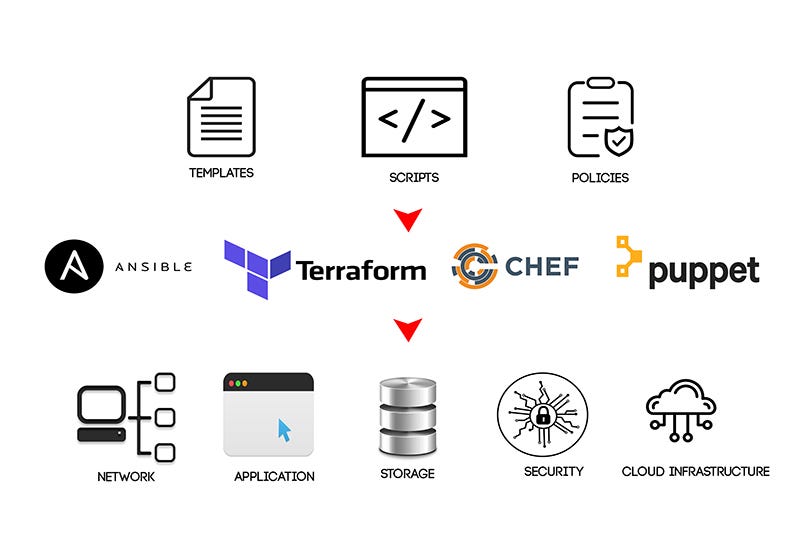

Kubernetes External Secrets lets us rely on third-party systems like HashiCorp Vault, AWS Secrets Manager, Azure Key Vault, etc. to keep REAL SECRETS. #HashiCorp Vault is the one that interests me, so let's look at it more closely.

Vault has two important components: auth methods and secrets engines. Kubernetes External Secrets can authenticate to Vault with a Kubernetes service account, using Vault's Kubernetes auth method. Vault itself is set up and used independently of the cloud vendor (maybe #AWS, #Azure, #GCP...).
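To make the two components concrete, here is a rough Python sketch of the HTTP calls involved: one login against the Kubernetes auth method, one read from a KV version 2 secrets engine. The Vault address, role name, and secret path are hypothetical placeholders, and the mount names `kubernetes` and `secret` are assumptions that depend on how your Vault is configured:

```python
import json
import urllib.request

# Standard location of the service account token inside a pod.
SA_TOKEN_PATH = "/var/run/secrets/kubernetes.io/serviceaccount/token"


def build_login_request(vault_addr, role, jwt, mount="kubernetes"):
    """Auth method: exchange the service account JWT for a Vault token."""
    body = json.dumps({"role": role, "jwt": jwt}).encode("utf-8")
    return urllib.request.Request(
        f"{vault_addr}/v1/auth/{mount}/login",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_kv2_read_request(vault_addr, client_token, path, mount="secret"):
    """Secrets engine: read a secret from a KV version 2 mount."""
    return urllib.request.Request(
        f"{vault_addr}/v1/{mount}/data/{path}",
        headers={"X-Vault-Token": client_token},
        method="GET",
    )


def send(request):
    """Send a request and parse the JSON response."""
    with urllib.request.urlopen(request) as resp:
        return json.load(resp)


# Hypothetical usage from inside a pod (names are placeholders):
# jwt = open(SA_TOKEN_PATH).read()
# login = send(build_login_request("https://vault.example.com:8200", "my-app", jwt))
# token = login["auth"]["client_token"]
# read = build_kv2_read_request("https://vault.example.com:8200", token, "my-app/db")
# secret = send(read)["data"]["data"]
```

In practice Kubernetes External Secrets or a Vault client library would do this for you; the sketch only shows what happens underneath.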

===== Interesting features of #HashiCorpVault =====

Centralized Storage Of All Secrets -> Imagine having 100+ services. Vault is your savior.

RBAC (Role-Based Access Control) -> Grant users read/write permissions over secrets.

Audits -> Who created a secret? How many times was it accessed? Etc.

Encrypted Secrets -> No more plain text or weak encryption

Dynamic Secrets -> Create secrets on demand with a defined Time To Live (TTL), so a leaked secret is only useful for a short time.

Password Rotation -> You wanna rotate secrets every 30 days? Simple with Vault.

Encryption As A Service -> Flexible APIs for encryption

High Availability -> Vault supports multi-server mode for HA
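As a taste of the Encryption As A Service feature, here is a small Python sketch that prepares an encrypt call for Vault's transit secrets engine. The address and key name are hypothetical, and the mount name `transit` is an assumption. Note that the Base64 step here is only a transport encoding required by the API; the real encryption happens inside Vault:

```python
import base64
import json


def build_transit_encrypt_call(vault_addr, key_name, plaintext: bytes, mount="transit"):
    """Return the URL and JSON body for a transit encrypt request.

    The transit API expects the plaintext to be Base64-encoded in the body.
    """
    url = f"{vault_addr}/v1/{mount}/encrypt/{key_name}"
    payload = {"plaintext": base64.b64encode(plaintext).decode("ascii")}
    return url, json.dumps(payload)


# Hypothetical usage: POST the body to the URL with an X-Vault-Token header.
url, body = build_transit_encrypt_call(
    "https://vault.example.com:8200", "orders-key", b"card-number-placeholder"
)
print(url)
print(body)
```

Vault answers with a ciphertext string that the application can store anywhere, while the encryption key never leaves Vault.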

Whenever I say what an awesome product it is, experts warn me that the complex configuration of HashiCorp #Vault is the real trap.

Maybe it's time to get some real hands-on experience. I will make a post about it some other day.

Is HashiCorp Vault configuration really that complex? Are you someone who deals with this configuration conundrum in your everyday job? I would be happy to hear about your interesting or critical configuration and troubleshooting challenges in the comments below.